Chloé Stoll, Richard Palluel-Germain, Roberto Caldara, Junpeng Lao, Matthew W G Dye, Florent Aptel, Olivier Pascalis, Face Recognition is Shaped by the Use of Sign Language, The Journal of Deaf Studies and Deaf Education, Volume 23, Issue 1, January 2018, Pages 62–70, https://doi.org/10.1093/deafed/enx034


Abstract

Previous research has suggested that early deaf signers differ in face processing. Which aspects of face processing are changed, and the role that sign language may have played in that change, are however unclear. Here, we compared face categorization (human/non-human) and human face recognition performance in early profoundly deaf signers, hearing signers, and hearing non-signers. In the face categorization task, the three groups performed similarly in terms of both response time and accuracy. However, in the face recognition task, signers (both deaf and hearing) were slower than hearing non-signers to accurately recognize faces, but had a higher accuracy rate. We conclude that sign language experience, but not deafness, drives a speed–accuracy trade-off in face recognition (but not face categorization). This suggests strategic differences in the processing of facial identity for individuals who use a sign language, regardless of their hearing status.

Face processing in deafness

Humans are said to be experts at recognizing faces, and existing evidence strongly suggests an important role for visual experience in the development of this expertise (Haist, Adamo, Han, Lee, & Stiles, 2013). Adult expertise in face recognition is indeed not attained by all individuals: in congenital cataract-reversal patients, for example, early visual impairment prevents the full development of face processing (Le Grand, Mondloch, Maurer, & Brent, 2001). There is also evidence that visual cognition more generally may be affected by atypical sensory experience. For example, early profound deafness leads to highly specific enhancements in visual processing (for reviews see Bavelier, Dye, & Hauser, 2006; Pavani & Bottari, 2012), with face processing also being affected in deaf individuals.

Faces have a special status in deaf communication. In sign language, facial expression provides not only emotional but also grammatical and syntactic markers (Brentari & Crossley, 2002; Liddell, 2003; Reilly, McIntire, & Seago, 1992; Reilly & Bellugi, 1996). The face and its expressions can to some extent substitute for tone of voice, and the same sign or sentence can take on a different meaning or nuance depending on the facial expression. Communication in sign language, by deaf or hearing people, therefore requires specific attention to the face, which might in turn affect the way these populations process faces. Studies have observed that deaf signers do not explore faces in the same way as hearing non-signers (Watanabe, Matsuda, Nishioka, & Namatame, 2011). For instance, while Japanese hearing non-signers spent more time fixating the nose region in an emotional valence rating task, Japanese deaf signers focused more on the eyes. It is therefore relevant to determine how face processing differs between deaf signers, hearing signers, and hearing non-signers.

Following this idea, Arnold and Murray (1998) found that deaf signers are better than hearing signers at face matching, but not at non-face object matching. Bettger, Emmorey, McCullough, and Bellugi (1997) reported that both deaf native signers and hearing native signers (hearing people born to deaf parents) are better than hearing non-signers at discriminating face photographs under different views and lighting conditions. A more recent study (de Heering, Aljuhanay, Rossion, & Pascalis, 2012) compared the performance of deaf signers and hearing non-signers using the face inversion effect (i.e., the fact that a face is recognized better upright than inverted) and the composite face effect (i.e., a visual illusion in which two identical top parts of a face are perceived as slightly different if their respective bottom parts belong to different identities). The authors reported longer response times to match inverted faces for the deaf signers compared to the hearing non-signers. At the same time, deaf signers were slower to recognize half of a face, but they were as sensitive as hearing non-signers to the composite face effect. These latter findings suggest that deaf signers may develop different ways of processing faces, with an increased dependence on the canonical orientation of the face. This idea is supported by recent results from He, Xu, and Tanaka (2016), who assessed the face inversion effect in deaf signers and hearing non-signers with a Face Dimension Task. This task made it possible to determine which part of the face was crucial to the face inversion effect by changing facial details in either the eye or the mouth area. They observed that the deaf signers were less affected by the face inversion effect than hearing non-signers when the changes occurred in the mouth area. It is worth noting that these differences were observed only for faces and not for other visual objects: de Heering et al. (2012) compared the recognition of faces and cars in deaf signers and hearing non-signers and observed differences only for faces.

Collectively, these studies suggest that early deafness associated with sign language use may lead to subtle changes in face processing. Emmorey (2001) postulated a specific enhancement of face processing for the aspects directly tied to sign language constraints. For example, memory for unfamiliar faces in a memory task with a delay of 3 min (unnecessary for conversation) did not differ between deaf early signers and hearing non-signers (McCullough & Emmorey, 1997). In contrast, tasks that required discrimination or recognition of small changes in a face did reveal differences between deaf signers and hearing non-signers (Bettger et al., 1997; McCullough & Emmorey, 1997). Relatedly, deaf signers are also more sensitive than hearing non-signers when changes occur around the mouth, a highly informative area in sign language (He et al., 2016; Letourneau & Mitchell, 2011; McCullough & Emmorey, 1997). Altogether, these findings suggest that signers need to notice small differences in a face in order to identify and interpret the correct meaning of an expression. Yet the role of deafness and sign language experience in different aspects of face processing remains unclear.

Face Categorization and Face Recognition

Face perception occurs at several distinct hierarchical levels in a coarse-to-fine process (Besson et al., 2017). Processing faces at a superordinate level (e.g., mammalian face processing) includes many relatively high-level categories of faces such as human and monkey faces. It is an easy process, implying fast response times and high accuracy (Besson et al., 2017). The face is, from the first hours of life, a very specific perceptual signal. Newborns are more attracted by faces (or face-like stimuli) than by other visual stimuli (Farroni et al., 2005; Johnson, Dziurawiec, Ellis, & Morton, 1991; Mondloch et al., 1999). These observations presuppose the existence of a mechanism, available at birth, for the extraction and processing of facial perceptual invariants such as two eyes, one nose, and one mouth in a specific spatial organization. Moreover, the face also benefits from specific brain processing in the fusiform face area, located in the fusiform gyrus of the temporal lobe and typically lateralized to the right hemisphere (Kanwisher, McDermott, & Chun, 1997). Categorization of faces as distinct from other types of visual stimulation is one of the first stages of face processing. For example, people with prosopagnosia can distinguish faces from other visual stimuli but cannot recognize them (Caldara et al., 2005; Rossion et al., 2003).

The superordinate processing involved in face categorization is essential for face processing, and it is less sensitive to experience than subordinate levels, at least in adults. The subordinate levels occur after face categorization has taken place, and involve more refined differentiation among face types within a superordinate category (e.g., race, gender, and age of human faces). The most subordinate level of processing involves the recognition of an individual’s identity from facial information. A range of evidence suggests that visual experience is important for recognizing individual faces. One example comes from studies of congenital cataract-reversal patients, whose early visual impairments prevent the typical development of face processing mechanisms (Le Grand et al., 2001). The influence of visual experience on face processing ability is also observed in typical adults in the other-race effect, that is, the fact that we are better at recognizing faces from a familiar racial background than from an unfamiliar one (Caldara & Abdi, 2006; Vizioli, Foreman, Rousselet, & Caldara, 2010; Vizioli, Rousselet, & Caldara, 2010).

Information about how deafness and/or sign language could affect categorization (a superordinate function) or recognition (a subordinate function) of faces is currently lacking. To our knowledge, no study has focused on the early stages of face processing or compared different levels of face processing in the same group of deaf and signing participants. This latter issue is important because a change at the superordinate level (i.e., the first stage of hierarchical processing) could induce changes at more complex levels of face processing (i.e., the subordinate level). It is therefore possible that past results on face processing in deaf signers can be explained by a modulation at the superordinate level that was indirectly observed in tasks involving subordinate face processing.

The goal of the present study was (a) to determine whether both face categorization and face recognition are altered in early congenitally deaf adults, and if so (b) to disentangle the role of deafness and sign language in bringing about those alterations. To this end, we administered a face categorization task (human vs. non-human) and a face recognition task (match-to-sample under different viewing conditions) to deaf signers of French Sign Language (LSF), hearing signers of LSF, and hearing non-signers. There are potentially three sources of modulation: an effect of deafness alone, an effect of sign language alone regardless of hearing status, or an additive effect of deafness and sign language. If early profound deafness is the main cause of the face processing differences observed in the past, deaf adults should differ from hearing adults regardless of their sign language experience. However, if sign language is the driving factor, then both deaf and hearing signers should exhibit evidence of processing that differs from that of hearing non-signers. Finally, it is also possible that a combination of early profound deafness and sign language experience is required to bring about differences in face processing, in which case deaf signers should differ from both hearing signers and hearing non-signers, and hearing signers should also differ from hearing non-signers. As mentioned before, the superordinate level of face processing is present nearly from birth whereas the subordinate level depends on (visual) experience. Consequently, we can predict that face categorization will be less affected than face recognition by hearing status and sign language experience.

Method

Participants

Nineteen hearing non-signers, 15 hearing signers, and 19 early profoundly deaf signers participated in the experiment. None had a history of neurological disorder or reported an uncorrected visual impairment. All participants reported not playing action video games. They gave their written consent and were paid for their participation. This study was approved by the local ethics committee (CERNI 2013-12-24–32).

The 19 hearing non-signers (Mage = 29.6, SDage = 8.6, range = 20–45) had no experience with any sign language, and were recruited via an electronic platform for research in cognitive science in Grenoble, France.

Among the 15 hearing signers (Mage = 40.2; SDage = 12.3, range = 22–63), 1 participant was a native hearing signer born to deaf parents with LSF as first language. The 14 other hearing signers had learned LSF as adults (Mage of acquisition = 27.0; SDage of acquisition = 10.17, range = 16–50), and had actively signed for between 4 and 28 years (M = 15.1; SD = 6.5) with a frequency of between 7 and 50 hr per week (M = 23.7; SD = 12.0). They rated their LSF fluency on a scale from 1 (no LSF knowledge) to 5 (perfectly fluent in LSF). The mean reported LSF fluency was 4.7 (SD = 0.4; range = 3.5–5; see Table 1). These adult learners were either professional French-LSF interpreters or support service professionals for deaf people in healthcare from the Health Care Unit for the Deaf of the Grenoble Hospital (France).

Table 1. LSF experience in the hearing signers group

| Participant | Age (years) | Age of first LSF exposure (years) | Frequency of signing (hours/week) | Self-rated LSF proficiency |
|---|---|---|---|---|
| 1 | 22 | Birth | 2 | 5 |
| 2 | 28 | 19 | 30 | 4.5 |
| 3 | 41 | 19 | 20 | 5 |
| 4 | 31 | 26 | 20 | 4.5 |
| 5 | 60 | 42 | 15 | 5 |
| 6 | 40 | 37 | 7 | 4.5 |
| 7 | 37 | 22 | 35 | 4.5 |
| 8 | 53 | 35 | 8 | 3.5 |
| 9 | 63 | 50 | 50 | 5 |
| 10 | 56 | 28 | 12 | 5 |
| 11 | 32 | 20 | 20 | 5 |
| 12 | 31 | 16 | 35 | 5 |
| 13 | 39 | 23 | 30 | 4.5 |
| 14 | 39 | 20 | 30 | 5 |
| 15 | 32 | 21 | 20 | 5 |


The 19 deaf participants (Mage = 33.5, SDage = 8.05, range = 21–49) were all characterized by a profound hearing loss (>90 dB, based on self-report). They reported their onset of hearing loss to range from birth to the first year of life. The mean reported LSF fluency was 4.0 (SD = 1.0; range = 2–5). Demographic details of the group are reported in Table 2. Instructions were given in writing, orally or in LSF by video, depending on each participant’s preference. LSF videos were recorded with a professional French-LSF interpreter.

Table 2. Demographic information about the deaf group. An asterisk (*) in the hearing support column indicates that the participant no longer uses hearing support; CI = cochlear implant.

| Participant | Age (years) | Hearing support | Age of first LSF exposure (years) | Self-rated LSF proficiency | First language |
|---|---|---|---|---|---|
| 1 | 38 | Hearing aid | 16 | 2 | French |
| 2 | 31 | Hearing aid | 4 | 4 | LSF |
| 3 | 24 | Hearing aid + CI | 15 | 5 | French |
| 4 | 25 | None | 7 | 4 | LSF |
| 5 | 25 | Hearing aid | 5 | 4.5 | French |
| 6 | 43 | Hearing aid | 23 | 3 | French |
| 7 | 37 | Hearing aid + CI | 20 | 3 | French |
| 8 | 29 | Hearing aid* | Birth | 5 | LSF |
| 9 | 30 | Hearing aid | 15 | 5 | French |
| 10 | 30 | Hearing aid | 21 | 3 | French |
| 11 | 42 | Hearing aid + CI | 25 | 3 | French |
| 12 | 42 | Hearing aid | 12 | 4.5 | LSF |
| 13 | 49 | Hearing aid | 20 | 5 | LSF |
| 14 | 42 | CI* | 5 | 5 | LSF |
| 15 | 33 | CI* | 5 | 2 | French |
| 16 | 21 | Hearing aid | Birth | 4 | French |
| 17 | 27 | CI* | 16 | 4.5 | French |
| 18 | 28 | Hearing aid | 6 | 5 | LSF |
| 19 | 42 | Hearing aid | 5 | 5 | LSF |


Materials and Procedure

Face categorization

In the face categorization task, participants were asked to decide whether the stimulus displayed at the center of the screen was a human or a non-human face. Stimuli were grayscale 562 × 762 pixel pictures (subtending 5.6° × 7.6° of visual angle) of 40 individuals (20 women, 20 men) with a neutral emotional expression, sourced from the KDEF faces database (Lundqvist, Flykt, & Öhman, 1998), and 40 mammals (10 each of cats, tigers, apes, and sheep). A fixation cross appeared for 500 ms and was replaced by the stimulus, which remained visible until the participant responded or 1,500 ms had elapsed. Participants were instructed to press one key if the stimulus was a human face, and another key for non-human faces. The A and P keys on a French keyboard were used, with allocation of those keys to response categories counterbalanced across participants. Each stimulus was presented three times for a total of 240 trials, administered in two blocks separated by a pause. Prior to the experimental session, participants completed a 10-trial practice session to become familiar with the task (these stimuli also appeared in the main experimental task).

Face recognition

The face recognition task was a classical short-term memory two-alternative forced choice task (2-AFC) similar to the one reported in Busigny and Rossion (2010) and de Heering et al. (2012). Each trial started with a central fixation cross (500 ms), which was replaced by a full-frontal face (i.e., the target) for 1,500 ms, and after an inter-trial interval of 250 ms, two 3/4 profile faces appeared. One of these faces was identical to the target and the second one was a same-gender face distractor. The faces appeared side-by-side in the center of the screen with the “same face” location (left/right) randomized across trials. Faces remained on screen until the participant responded or 3,000 ms had elapsed. The participants were instructed to select the face with the same identity as the target by pressing the key corresponding to the screen location of the stimulus (left arrow or right arrow) on the keyboard. Pictures were grayscale faces without external cues (hair, ears or accessories) of 12 women and 12 men. The faces subtended 4.6° × 6.2° of visual angle. In the recognition phase, the two faces were presented 8.9° to the left or right of the central fixation cross. There were 48 trials in total: each face served twice as the target, but the pair of faces presented in the recognition phase was never the same across trials (e.g., female face #1 could only have been associated with female face #2 in one trial). Before the experimental session, participants completed a 5-trial practice session.
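The trial constraints described above (48 trials, each of the 24 faces used twice as target, same-gender distractors, no face pair repeated) can be sketched as a small trial-list generator. This is only an illustration under stated assumptions: the pairing rule (each target paired with the next two faces of the same gender, wrapping around), the function name `build_trials`, and the dictionary keys are hypothetical and are not taken from the original E-Prime script.

```python
import random


def build_trials(n_faces_per_gender=12, seed=0):
    """Build a 2-AFC trial list that satisfies the stated design constraints.

    Hypothetical pairing rule (an assumption, not the authors' procedure):
    target i is paired once with face i+1 and once with face i+2 of the same
    gender (indices wrap around). For 5 or more faces per gender this never
    repeats an unordered pair of faces.
    """
    rng = random.Random(seed)
    trials = []
    for gender in ("F", "M"):
        for i in range(n_faces_per_gender):
            for offset in (1, 2):
                distractor = (i + offset) % n_faces_per_gender
                trials.append({
                    "target": (gender, i),
                    "distractor": (gender, distractor),
                    # Screen side of the matching face, randomized per trial.
                    "target_side": rng.choice(("left", "right")),
                })
    rng.shuffle(trials)  # randomize presentation order
    return trials


trials = build_trials()
print(len(trials), "trials")
```

With 12 faces per gender this yields 24 trials per gender, and every unordered target/distractor pair occurs exactly once.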

Participants performed the face categorization and face recognition tasks in random order. For both tasks, participants were instructed to respond as fast and as accurately as possible and received accuracy feedback during the practice session. The study lasted about 15 min. Both tasks were programmed with E-Prime® 2.0 software (Psychology Software Tools, Inc.) and were run on a 19-inch laptop at a viewing distance of approximately 60 cm in a quiet and dimly lit room.
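As a worked check on the stimulus geometry, the reported visual angles can be converted to approximate on-screen sizes with the standard relation size = 2 · d · tan(θ/2). The sketch below assumes the viewing distance was exactly 60 cm (the text says "approximately"); the function names are illustrative.

```python
import math


def size_from_visual_angle(angle_deg, distance_cm):
    """Physical extent (cm) that subtends angle_deg at distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


def visual_angle_from_size(size_cm, distance_cm):
    """Visual angle (degrees) subtended by size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


VIEWING_DISTANCE_CM = 60  # assumed exact; reported as approximate

# Visual angles (width, height) reported for the two tasks.
reported_angles = {
    "categorization": (5.6, 7.6),
    "recognition": (4.6, 6.2),
}

for task, (width_deg, height_deg) in reported_angles.items():
    width_cm = size_from_visual_angle(width_deg, VIEWING_DISTANCE_CM)
    height_cm = size_from_visual_angle(height_deg, VIEWING_DISTANCE_CM)
    print(f"{task}: {width_cm:.2f} cm x {height_cm:.2f} cm")
```

At this distance one degree corresponds to roughly one centimetre on screen, so the categorization stimuli were a little under 6 cm wide.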

Data Analysis

For the face categorization task we conducted a repeated-measures ANOVA with stimulus type (human vs. non-human) as a within-subject variable and group (deaf signers, hearing signers, hearing non-signers) as a between-subject variable, for both response time (correct responses only) and accuracy. For the face recognition task we conducted a one-way ANOVA for independent samples with group (deaf signers, hearing signers, hearing non-signers) as the factor, for both response time (correct responses only) and accuracy.

On each task, in order to understand the respective role of sign language experience and deafness, we applied Helmert contrasts on each analysis (i.e., RTs and accuracy) with groups ranked in the following order: hearing non-signer, hearing signer, deaf signer. The first contrast was to assess the effect of sign language (hearing non-signer vs. both signers groups), and the second contrast was to assess the effect of deafness within signing groups (hearing signers vs. deaf signers). All reported p-values are two-tailed.
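The two planned contrasts can be written as weight vectors over the group order given above. The sketch below computes the contrast t statistic with the pooled within-group error term, which has N − k degrees of freedom (matching, for example, the 49 error degrees of freedom reported for 52 participants in three groups). The sign convention of the weights, the function name `contrast_t`, and the toy data are assumptions made for illustration; the original analysis software is not specified in the text.

```python
import math
from statistics import mean

# Group order: hearing non-signers, hearing signers, deaf signers.
# Sign convention is an assumption; flipping all signs flips the sign of t.
HELMERT_WEIGHTS = {
    "sign_language": (-2, 1, 1),  # non-signers vs. both signer groups
    "deafness": (0, -1, 1),       # hearing signers vs. deaf signers
}


def contrast_t(groups, weights):
    """Return (t, df) for a planned contrast on independent groups."""
    n_total = sum(len(g) for g in groups)
    df_error = n_total - len(groups)
    # Pooled within-group variance (mean square error of a one-way ANOVA).
    ss_within = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups)
    mse = ss_within / df_error
    estimate = sum(w * mean(g) for w, g in zip(weights, groups))
    std_error = math.sqrt(
        mse * sum(w ** 2 / len(g) for w, g in zip(weights, groups))
    )
    return estimate / std_error, df_error


# Toy data for illustration only (not the study's data).
toy_groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [3.0, 4.0, 5.0]]
for name, weights in HELMERT_WEIGHTS.items():
    t_value, df = contrast_t(toy_groups, weights)
    print(f"{name}: t({df}) = {t_value:.2f}")
```

Each weight vector sums to zero and the two vectors have a zero dot product, so the contrasts address separate questions: first whether signing matters, then whether deafness matters among signers.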

Results

Face Categorization

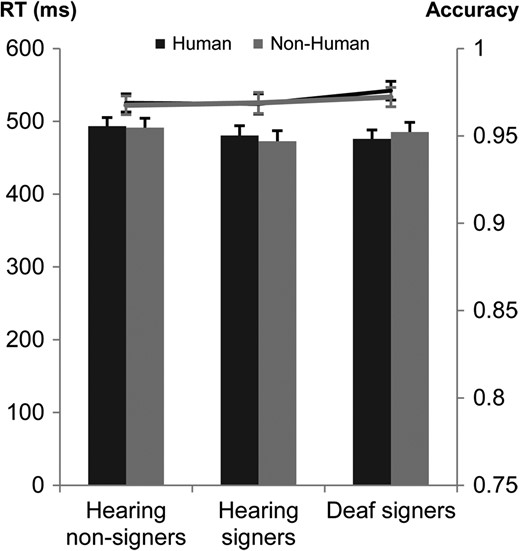

Because of technical problems, data from one deaf participant were not recorded. A further participant from the deaf signer group was excluded from the analyses as an outlier, with a Studentized Deleted Residual (SDR) larger than the calculated critical value (i.e., 3.26) for the RT variable (Belsley, Kuh, & Welsch, 2005). Statistical analysis was therefore conducted with 19 hearing non-signers, 15 hearing signers, and 18 deaf signers. See Figure 1.

Figure 1. Mean RTs and accuracy in the face categorization task for hearing non-signers, hearing signers, and deaf signers. Response times are shown as columns and accuracy as lines. Error bars represent the standard error of the mean.

Age of participants

There was a significant age difference between groups, F(2,49) = 5.18, p = 0.01, η2p = 0.17: hearing signers were older than hearing non-signers, F(1,49) = 10.30, p = 0.002, and marginally older than deaf signers, F(1,49) = 3.95, p = 0.052. We tested the effect of age on RT and accuracy. Both regression analyses were non-significant (p > 0.05), suggesting that the age of participants had no significant effect on response time or accuracy.

Frequency of LSF use and age of acquisition

Because the frequency of LSF use varied considerably within the hearing signers group, and the age of LSF acquisition varied considerably within both the hearing signers and deaf signers groups, we tested the effect of each variable on RT and accuracy. Regression analyses were non-significant (p > 0.05), suggesting that the frequency of LSF use and the age of acquisition had no influence on response time or accuracy.

Response time

The ANOVA did not reveal a significant effect of group, F(2,49) = 0.42, p = 0.66. Moreover, Helmert contrasts did not reveal any significant effect of sign language experience, t(49) = 0.58, p = 0.56, or hearing status, t(49) = −0.61, p = 0.54. In other words, the three groups had similar response times when correctly categorizing human and non-human faces. In addition, the analysis did not reveal significant RT differences for human and non-human stimuli, F(1,49) = 0.001, p = 0.98, nor a significant interaction between group and type of stimulus, F(2,49) = 1.81, p = 0.17.

Accuracy

The ANOVA did not reveal a significant effect of group, F(2,49) = 0.5, p = 0.59. Moreover, Helmert contrasts did not reveal any significant effect of sign language experience, t(49) = −0.57, p = 0.56, or hearing status, t(49) = −0.82, p = 0.41. In other words, the three groups did not significantly differ in their accuracy at categorizing human and non-human faces. Moreover, the analysis did not reveal a significant difference in accuracy between human and non-human stimuli, F(1,49) = 0.2, p = 0.68, nor a significant interaction between group and type of stimulus, F(2,49) = 0.1, p = 0.89.

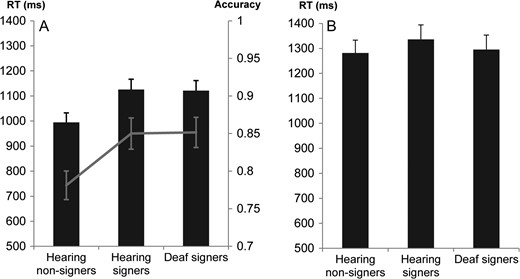

Face Recognition

Because of technical problems, data from one deaf participant were not recorded. A further participant from the hearing non-signer group was excluded from analyses because they were considered to be an outlier with an SDR larger than the calculated critical value (i.e., 3.26) for the RT variable (Belsley et al., 2005). Statistical analysis was conducted with 18 hearing non-signers, 15 hearing signers, and 18 deaf signers. See Figure 2A.

Figure 2. (A) Mean performance in the face recognition task for hearing non-signers, hearing signers, and deaf signers. Response times are shown as columns and accuracy as lines. (B) Mean Inverse Efficiency Score (IES) in the face recognition task for hearing non-signers, hearing signers, and deaf signers. Error bars represent the standard error of the mean in both plots.

Age of participants

As for the face categorization task, we tested the effect of age of participants on RT and accuracy. Here again both regressions were non-significant (p > 0.05), suggesting that the age of participants had no effect on response time or on accuracy.

Frequency of LSF use and age of acquisition

As for the face categorization task, we tested the effect of frequency of using LSF and the age of acquisition on RT and accuracy. Here again both regressions were non-significant (p > 0.05), suggesting that the frequency of using LSF and the age of acquisition had no effect on response time or on accuracy.

Response time

While the ANOVA did not reveal a significant effect of group, F(2,48) = 2.98, p = 0.06, η2p = 0.11, planned Helmert contrasts revealed a significant effect of sign language experience, t(48) = −2.43, p = 0.019; η2p = 0.11, but no significant effect of hearing status, t(48) = 0.41; p = 0.69. Signers (deaf or hearing) were significantly slower to accurately recognize a face than hearing non-signers.

Accuracy

The ANOVA revealed a significant effect of group, F(2,48) = 4.84, p = 0.012, η2p = 0.17. Helmert contrasts showed a significant effect of sign language experience, t(48) = −3.09, p = 0.003, η2p = 0.17, but no significant effect of hearing status, t(48) = −0.23, p = 0.82. Signers (deaf or hearing) were significantly more accurate at recognizing a face than hearing non-signers.

The analysis of the face recognition task suggested a speed–accuracy trade-off in signers: they were slower but more accurate than hearing non-signers on this task. We therefore carried out further statistical analyses on these data in order to better understand these differences and to determine whether signers showed a genuine speed–accuracy trade-off, that is, a different cognitive strategy, in face recognition. We first computed an Inverse Efficiency Score (IES) analysis and then modeled our data with a Bayesian hierarchical drift-diffusion (HDD) model.

IES analysis

The Inverse Efficiency Score (IES) combines RT and accuracy in a single score by dividing the mean RT by the proportion of correct responses (RT/ACC; Bruyer & Brysbaert, 2011). A smaller IES indicates faster responses without sacrificing accuracy. The ANOVA for independent samples (i.e., groups) on IES did not reveal a significant group effect, F(2,48) = 0.34, p = 0.71. Moreover, Helmert contrasts did not reveal any significant effect of sign language experience, t(48) = 0.58, p = 0.56, or deafness, t(48) = −0.62, p = 0.54. See Figure 2B.
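A minimal sketch of the IES computation for one participant follows, assuming the score is the mean RT of correct trials divided by the proportion of correct trials. The function name and the two example participants are hypothetical and chosen only to show how a slower but more accurate participant can obtain the same IES as a faster but less accurate one.

```python
from statistics import mean


def inverse_efficiency(correct_rts_ms, n_trials):
    """IES = mean RT of correct trials / proportion of correct trials."""
    accuracy = len(correct_rts_ms) / n_trials
    return mean(correct_rts_ms) / accuracy


N_TRIALS = 48  # number of trials in the face recognition task

# Hypothetical participants (illustrative values, not data from the study).
fast_less_accurate = [900.0] * 36   # 36/48 correct, mean RT 900 ms
slow_more_accurate = [1050.0] * 42  # 42/48 correct, mean RT 1,050 ms

ies_fast = inverse_efficiency(fast_less_accurate, N_TRIALS)
ies_slow = inverse_efficiency(slow_more_accurate, N_TRIALS)
print(f"IES fast: {ies_fast:.0f} ms, IES slow: {ies_slow:.0f} ms")
```

When slower responses are offset by higher accuracy in this way, group differences in RT and accuracy can coexist with no group difference in IES, which is the pattern a speed–accuracy trade-off would produce.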

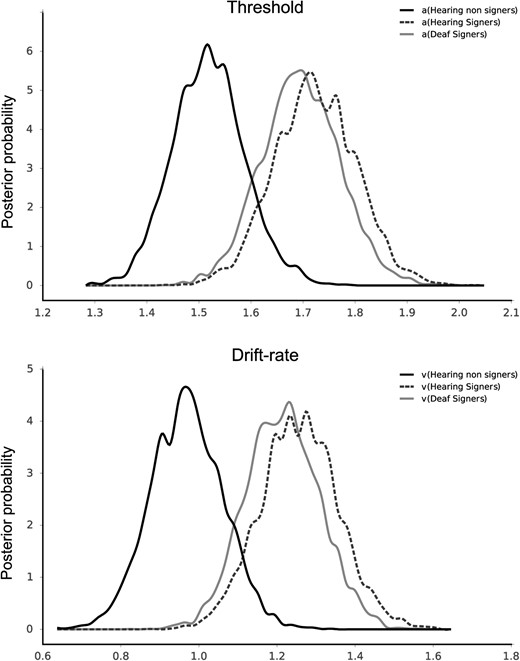

Bayesian HDD model

The HDD model describes participants’ decision behavior in two-choice decision tasks and extracts parameters that summarize the decision-making process. The model postulates that, in such two-choice tasks, individuals accumulate information over time until sufficient evidence for one response over the other has been accumulated, resulting in a motor response. The first parameter, the threshold (a), corresponds to the quantity of information required to make a decision. The higher the threshold, the more evidence is required before responding. A difference in threshold therefore indicates a different strategy in individuals’ decision-making processes: a high threshold results in a more cautious response that takes longer, whereas a low threshold results in more rapid but potentially error-prone responses. The second parameter, the drift-rate (v), represents the speed of evidence accumulation and is directly influenced by the quality of information extracted from the stimulus. Together, the drift-rate and the threshold determine the decision time, that is, the reaction time component that excludes non-decision processes such as stimulus encoding and motor response execution.

We estimated threshold and drift-rate parameters for each group of observers using the hierarchical Bayesian estimation of the Drift-Diffusion Model (HDDM Python package; Wiecki, Sofer, & Frank, 2013; see Notes). Statistical analyses showed a higher drift-rate in the signers (deaf signers v = 1.21, [1.03, 1.40]; hearing signers v = 1.25, [1.04, 1.45]; numbers in square brackets indicate the 95% credible interval) compared to hearing non-signers (v = 0.97, [0.80, 1.15]; p = 0.029 and p = 0.017, respectively), while the deaf signers and the hearing signers did not differ from each other (p = 0.630). A similar pattern was observed for the threshold. Deaf signers (a = 1.69, [1.55, 1.84]) and hearing signers (a = 1.72, [1.57, 1.87]) had higher thresholds than hearing non-signers (a = 1.52, [1.39, 1.66]; p = 0.040 and p = 0.027, respectively; p = 0.608 for the deaf signers vs. hearing signers comparison).
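
The roles of the two parameters can be illustrated with a small simulation. The sketch below is not the HDDM estimation procedure used in this study. It is a simplified random-walk approximation of a drift-diffusion process, assuming unit noise, an unbiased starting point halfway between the boundaries, and no non-decision time. The function name and all parameter values are hypothetical and chosen only to show the direction of each effect.

```python
import random


def simulate_ddm(v, a, n_trials=2000, dt=0.002, noise=1.0, seed=0):
    """Simulate an unbiased two-boundary diffusion process.

    Evidence starts at a / 2 and drifts at rate v until it reaches a
    (correct response) or 0 (error).
    Returns (accuracy, mean decision time in seconds).
    """
    rng = random.Random(seed)
    step_sd = noise * dt ** 0.5
    n_correct = 0
    total_time = 0.0
    for _ in range(n_trials):
        evidence, elapsed = a / 2.0, 0.0
        while 0.0 < evidence < a:
            evidence += v * dt + step_sd * rng.gauss(0.0, 1.0)
            elapsed += dt
        n_correct += evidence >= a
        total_time += elapsed
    return n_correct / n_trials, total_time / n_trials


# Hypothetical parameter values (not the estimates reported in the text).
acc_low_a, time_low_a = simulate_ddm(v=1.0, a=1.0)     # baseline
acc_high_a, time_high_a = simulate_ddm(v=1.0, a=2.0)   # higher threshold
acc_high_v, time_high_v = simulate_ddm(v=2.0, a=1.0)   # higher drift-rate

print(f"baseline:       acc={acc_low_a:.2f}, time={time_low_a:.2f} s")
print(f"high threshold: acc={acc_high_a:.2f}, time={time_high_a:.2f} s")
print(f"high drift:     acc={acc_high_v:.2f}, time={time_high_v:.2f} s")
```

In this sketch, raising the threshold alone makes responses more accurate but slower, whereas raising the drift-rate alone makes them more accurate and faster.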

The HDD model therefore suggests that signers are faster than non-signers to extract and accumulate information from the stimulus (i.e., higher drift-rate) but are also more conservative and cautious in their responses (i.e., higher threshold); see Figure 3.
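
To see how a higher drift-rate and a higher threshold can combine into slower but more accurate responses, the reported group estimates can be plugged into the closed-form expressions for a basic diffusion process. This is only a rough sketch and not a reanalysis: it assumes unit noise, an unbiased starting point, and no trial-to-trial variability, and it ignores non-decision time, whereas the fitted HDDM model may include further parameters. The function name is hypothetical.

```python
import math


def ddm_predictions(v, a):
    """Closed-form accuracy and mean decision time of an unbiased DDM.

    Assumes unit noise, a starting point at a / 2 and no trial-to-trial
    variability; non-decision time is left out.
    """
    accuracy = 1.0 / (1.0 + math.exp(-v * a))
    mean_decision_time = (a / (2.0 * v)) * math.tanh(v * a / 2.0)
    return accuracy, mean_decision_time


# Group estimates of drift-rate (v) and threshold (a) as reported in the text.
estimates = {
    "hearing non-signers": (0.97, 1.52),
    "hearing signers": (1.25, 1.72),
    "deaf signers": (1.21, 1.69),
}

predictions = {group: ddm_predictions(v, a) for group, (v, a) in estimates.items()}
for group, (acc, time) in predictions.items():
    # Time is in the model's units (assumed here to be seconds).
    print(f"{group}: accuracy ~ {acc:.2f}, mean decision time ~ {time:.2f}")
```

Under these simplifying assumptions, both signer groups come out as more accurate and slower to decide than hearing non-signers, which is the same direction as the behavioral trade-off: the higher threshold outweighs the speed gain from the higher drift-rate.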

Posteriors of threshold (upper plot) and drift-rate (lower plot) parameters for the hierarchical Bayesian estimation of the Drift-Diffusion Model in the face recognition task for hearing non-signers, hearing signers, and deaf signers

Discussion

The aim of the study was to disentangle the impact of early deafness and sign language experience on superordinate and subordinate levels of face processing. The superordinate level was tested via a face categorization task, tapping into the initial stages of face processing; this ability is argued to be less sensitive to age and experience than other stages, such as face recognition. By comparison, subordinate levels of face processing, here tested with a face recognition task, are known to be sensitive to visual experience and could be influenced either by early deafness or by sign language exposure.

As hypothesized, we did not observe any significant differences between signers and non-signers, or between deaf and hearing participants, in the face categorization task: all three groups were equally fast to categorize human versus non-human faces, a process not tied to sign language constraints. In addition, the accuracy of the responses was high (hearing non-signers 96.8%, hearing signers 96.8%, deaf signers 97.2%) and was associated with fast RTs, which could reflect a ceiling effect. Categorizing human versus non-human faces is an easy process, but the task was designed to test for, and rule out, important differences in the early stages of face processing between our experimental groups. It was also a way to verify that a more general aspect of visual perception is modulated neither by early deafness, nor by sign language, nor by a combination of the two.

In contrast, we observed that signers (both hearing and deaf) were slower but more accurate in recognizing faces compared to (hearing) non-signers. These differences cannot be accounted for by the age difference between groups, as regressions between the age of the participants and reaction time and accuracy were not significant. Nor can they be explained by changes in more general aspects of cognition in signers, such as visual perception, working memory, or visual attention, since previous studies did not observe longer response times in (deaf) signers for detection (Loke & Song, 1991), visual search (Rettenbach, Diller, & Sireteanu, 1999; Stivalet, Moreno, Richard, Barraud, & Raphel, 1998), or orienting of attention (Heimler et al., 2015; Parasnis & Samar, 1985) in the central visual field.

This speed–accuracy trade-off was further analyzed by first computing IESs (Bruyer & Brysbaert, 2011) and then by modeling the data using HDDM (Wiecki et al., 2013). The IES did not provide evidence for an efficiency difference between signers (both hearing and deaf) and hearing non-signers. According to the estimated Drift-Diffusion Model parameters, however, signers accumulated information from the stimuli at a faster rate but also set a higher (more conservative) threshold for making a response.

Our results support past research on the impact of sign language exposure on face processing, but instead of observing an enhancement we found a speed–accuracy trade-off that suggests a different strategy for face recognition in signers. This discrepancy could be explained by the fact that the previous studies did not analyze both accuracy and RTs in the same experiment, or by the nature of the task used to assess participants' face processing. Arnold and Murray's (1998) study focused on the speed of completing a face-matching task, and Bettger et al. (1997) analyzed accuracy only. Furthermore, we tested face recognition abilities in a two-alternative forced choice paradigm, whereas Arnold and Murray (1998) tested the subordinate level with a delayed face-matching task and Bettger et al. (1997) with a simultaneous face-matching task.

Our data suggest that sign language may shape subordinate levels of face processing, such as face recognition, regardless of hearing status. The face recognition task used in this study shares some constraints with sign language communication: as in signing, participants needed to pay attention to small details of the face in order to make a decision and react properly.

It is worth noting that a Mann–Whitney U test comparing hearing and deaf signers indicated that the hearing signers group (median = 5) ranked their LSF level higher than the deaf signers group (median = 4.5) (U = 87.00, p = 0.042, adjusted values for small samples). This difference could be interpreted in two different ways. First, it could be a manifestation of the Dunning–Kruger effect in hearing signers: a cognitive bias in which individuals with low abilities (here in LSF) tend to overestimate their abilities compared to real experts. Second, this higher self-estimated level of LSF skill in hearing signers could also reflect a real difference in ability. In fact, while all the hearing signers had received full training in LSF, some of the deaf signers were more comfortable with French than with LSF. Moreover, the difference induced by sign language experience does not seem to be restricted to exposure early in development, since most of the hearing signer participants were late signers with a mean age of LSF exposure of 15 years. Fluency in LSF seems to be the key criterion for observing the difference in face recognition strategy, since neither age of LSF acquisition (in hearing and deaf signers) nor frequency of LSF use (in hearing signers) was linked to response time or accuracy.

It is, however, important to consider possible differences in the developmental trajectory that led to such performance. Although we did not observe a significant correlation in adult signers between age of sign language acquisition and performance, this does not imply that early experience of sign language has no impact on face processing or other aspects of visual cognition. In their study on face recognition in deaf adults (Study 2), Bettger et al. (1997) did not observe differences between deaf adults with deaf parents (native signers) and deaf adults with hearing parents. Both groups outperformed hearing non-signers in discriminating and recognizing small changes in a face. In the developmental version of their study (with children between 6 and 9 years of age; Study 4), however, they observed that deaf native-signing children performed better than both deaf children of hearing parents and hearing children of hearing parents. This result suggests that even if no difference is observed in adults, age of sign language acquisition and early exposure can specifically influence children's cognition. It is therefore necessary to extend our work to children.

The cognitive processes underlying the trade-off we report may be diverse and complex, but at least three hypotheses can be formulated to account for these results. The first is that sign language may bring changes in visual cognition because it involves motor activity. A few studies have suggested that visual perception/cognition can be influenced by motor activity (Dupierrix, Gresty, Ohlmann, & Chokron, 2009; Herlihey, Black, & Ferber, 2013). It seems unlikely, however, that face processing could be affected by motor activity. The effects of action on visual perception are more likely to be tied to spatial visual cognition (Dupierrix et al., 2009) or early perceptual processing (Herlihey et al., 2013) than to the elaborate and complex processing required for face recognition.

The second hypothesis is linked to a recent study in typically hearing populations suggesting that bilinguals may differ from monolinguals in how they process and recognize facial stimuli (Kandel et al., 2016). The study found that spoken language bilinguals behave similarly to the deaf and hearing signers in the current study, in that their response times were slower than those of the monolinguals and their accuracy was higher. The authors interpreted their results within a developmental framework, suggesting that early exposure to more than one language leads to a perceptual organization (and therefore narrowing) that goes beyond language processing and could extend to the analysis of faces. The signers in our study are bilinguals, which may have affected their face processing abilities by the same, or a similar, mechanism. However, in our study the hearing signers were not early bilinguals (except one) and were likely to have learned sign language after the point at which narrowing typically happens (Maurer & Werker, 2014). Alternatively, extensive experience with sign language could be sufficient to induce a shift in the cognitive strategy for face recognition. It would be interesting to know when in the learning process this expertise effect occurs, that is, to establish how much signing experience and fluency are necessary to bring about the speed–accuracy trade-off. Here, it is impossible to estimate this minimum level of experience, both because all signers were selected for their fluency in LSF and because the LSF fluency scale was based on self-report and did not provide information about the actual level of knowledge of LSF.

Finally, a third hypothesis can be postulated. Faces provide linguistic, syntactic, and emotional markers in sign language communication (Brentari & Crossley, 2002; Liddell, 2003; Reilly et al., 1992), meaning that facial expression can modulate or change the meaning of a sentence. This forces signers to process richer visual information from faces, which on the one hand increases processing time but on the other hand enhances encoding. This does not apply to face categorization, which requires only surface processing of the input (general shape, texture, etc.) to allow discrimination between human and non-human faces.

To conclude, this study was designed to examine different aspects of face processing in signers. The first, superficial level of face processing (discriminating between human and non-human faces) seems to be affected neither by early deafness nor by the use of sign language, strengthening the proposal that this process is resistant to experience. On the other hand, a more subordinate and complex level of face processing, face recognition, differs in skilled users of sign language, whether they are deaf or hearing. Our study provides important and novel insights into the plasticity of face processing and into the face processing abilities of individuals who use a visual-gestural language such as LSF. Further studies are now necessary to better characterize the difference in the decisional strategy used by signers to process faces. For example, eye-tracking studies would make it possible to determine whether the shift observed in our study is linked to differential gaze patterns occurring during the extraction of diagnostic information during face recognition. Electrophysiological (EEG) studies would also provide interesting information about the neural dynamics of face recognition in signers and non-signers. Although past EEG studies on deaf signers and face processing did not find drastic differences in terms of the face-sensitive N170 component (Mitchell, Letourneau, & Maslin, 2013; Mitchell, 2017), given our observations it might be relevant to focus on attentional components related to face processing. In fact, signers might not necessarily process faces differently; they might, however, pay more attention to small differences in facial expression or facial configuration in order to accurately understand the meaning of a sentence and to react properly, which might in turn influence face processing. Altogether, our data bring novel insights into the different ways in which face processing can be achieved and pave the way for future studies aiming to pinpoint the roots of our observations.

Conflicts of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

R.C. and J.L. were supported by the Swiss National Science Foundation (n° 100014_156490/1) awarded to R.C.

Notes

HDDM uses hierarchical Bayesian estimation (Markov chain Monte Carlo via PyMC) of the DDM parameters and performs well even with a small number of trials (Ratcliff & Childers, 2015). Hypothesis significance testing was performed on the posteriors of the parameter estimates by directly comparing the Markov chain Monte Carlo traces (Kruschke, 2014).
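
One common way to compare Markov chain Monte Carlo traces directly is to compute the proportion of posterior draws in which one group's parameter exceeds the other's. The sketch below assumes that this is the kind of comparison behind the reported p values, which the note does not spell out. The function name is hypothetical, and the traces are synthetic stand-ins generated for illustration, not the posterior samples of this study.

```python
import random


def prob_greater(trace_a, trace_b):
    """Proportion of MCMC draws in which parameter A exceeds parameter B."""
    if not trace_a or len(trace_a) != len(trace_b):
        raise ValueError("traces must be non-empty and of equal length")
    return sum(x > y for x, y in zip(trace_a, trace_b)) / len(trace_a)


# Synthetic stand-ins for two posterior traces (not the study's samples).
rng = random.Random(1)
trace_group_a = [rng.gauss(1.2, 0.1) for _ in range(5000)]
trace_group_b = [rng.gauss(1.0, 0.1) for _ in range(5000)]

p_a_greater = prob_greater(trace_group_a, trace_group_b)
print(f"P(A > B) = {p_a_greater:.3f}; P(A <= B) = {1 - p_a_greater:.3f}")
```

The complement, P(A <= B), is the posterior probability that the difference goes in the opposite direction; a small value indicates that the two posteriors barely overlap in that direction.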